What's Interval Partitioning

PS_TXN is an interval partitioned table. Interval partitioning is an enhancement to range partitioning in Oracle 11g and it automatically creates time-based partitions as new data is added.[2,3]

We have found out PS_TXN is an interval partitioned table by accident. Checking Oracle alert log (i.e., <adr_home>/diag/rdbms/ems3266/ems3266/alert/log.xml),[4] the following entry was found:

TABLE FUSION.PS_TXN: ADDED INTERVAL PARTITION SYS_P23979 (401) VALUES LESS THAN (TO_DATE(' 2014-01-28 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', 'NLS_CALENDAR=GREGORIAN'))

To verify that PS_TXN is truly an interval partitioned table, you can retrieve its table definition as follows:[5]

SQL> select dbms_metadata.get_ddl('TABLE', 'PS_TXN', 'FUSION') from dual;

CREATE TABLE "FUSION"."PS_TXN"

( "ID" NUMBER(20,0) NOT NULL ENABLE,

"PARENTID" NUMBER(20,0),

"COLLID" NUMBER(10,0),

"CONTENT" BLOB,

"CREATION_DATE" DATE DEFAULT SYSDATE,

CONSTRAINT "PS_TXN_PK" PRIMARY KEY ("COLLID", "ID") USING INDEX PCTFREE 10 INITRANS 2 MAXTRANS 255

STORAGE(

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "FUSION_TS_TX_IDX" GLOBAL PARTITION BY HASH ("COLLID")

(

PARTITION "SYS_P16301" TABLESPACE "FUSION_TS_TX_IDX" ,

PARTITION "SYS_P16302" TABLESPACE "FUSION_TS_TX_IDX" ,

<snipped>

PARTITION "SYS_P16364" TABLESPACE "FUSION_TS_TX_IDX"

) ENABLE

) PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

STORAGE(BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "FUSION_TS_TX_DATA"

LOB ("CONTENT") STORE AS SECUREFILE (

ENABLE STORAGE IN ROW CHUNK 8192

CACHE NOLOGGING COMPRESS MEDIUM KEEP_DUPLICATES

STORAGE( BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT))

PARTITION BY RANGE ("CREATION_DATE") INTERVAL (NUMTODSINTERVAL(1,'DAY'))

PARTITION "P_0" VALUES LESS THAN (TO_DATE(' 2012-12-23 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', (

'NLS_CALENDAR=GREGORIAN')) SEGMENT CREATION IMMEDIATE

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

NOCOMPRESS LOGGING

STORAGE(INITIAL 131072 NEXT 131072 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "FUSION_TS_TX_DATA"

LOB ("CONTENT") STORE AS SECUREFILE (

TABLESPACE "FUSION_TS_TX_DATA"

ENABLE STORAGE IN ROW CHUNK 8192

CACHE NOLOGGING COMPRESS MEDIUM KEEP_DUPLICATES

STORAGE(INITIAL 131072 NEXT 131072 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT

)

)

)

Note that only the first partition (i.e., P_0) was specified at beginning and that interval's high value (see below for explanation) is "2012-12-23 00:00:00". It also specifies that above the transition point of December 23, 2012, partitions will be created with a width of one day.

The normal way to reference a specific partition is to use the partition (partition_name) in the query. For example, we have found 13187 rows in our newly created partition (i.e., SYS_P23979).

CREATE TABLE "FUSION"."PS_TXN"

( "ID" NUMBER(20,0) NOT NULL ENABLE,

"PARENTID" NUMBER(20,0),

"COLLID" NUMBER(10,0),

"CONTENT" BLOB,

"CREATION_DATE" DATE DEFAULT SYSDATE,

CONSTRAINT "PS_TXN_PK" PRIMARY KEY ("COLLID", "ID") USING INDEX PCTFREE 10 INITRANS 2 MAXTRANS 255

STORAGE(

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "FUSION_TS_TX_IDX" GLOBAL PARTITION BY HASH ("COLLID")

(

PARTITION "SYS_P16301" TABLESPACE "FUSION_TS_TX_IDX" ,

PARTITION "SYS_P16302" TABLESPACE "FUSION_TS_TX_IDX" ,

<snipped>

PARTITION "SYS_P16364" TABLESPACE "FUSION_TS_TX_IDX"

) ENABLE

) PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

STORAGE(BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "FUSION_TS_TX_DATA"

LOB ("CONTENT") STORE AS SECUREFILE (

ENABLE STORAGE IN ROW CHUNK 8192

CACHE NOLOGGING COMPRESS MEDIUM KEEP_DUPLICATES

STORAGE( BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT))

PARTITION BY RANGE ("CREATION_DATE") INTERVAL (NUMTODSINTERVAL(1,'DAY'))

PARTITION "P_0" VALUES LESS THAN (TO_DATE(' 2012-12-23 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', (

'NLS_CALENDAR=GREGORIAN')) SEGMENT CREATION IMMEDIATE

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

NOCOMPRESS LOGGING

STORAGE(INITIAL 131072 NEXT 131072 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "FUSION_TS_TX_DATA"

LOB ("CONTENT") STORE AS SECUREFILE (

TABLESPACE "FUSION_TS_TX_DATA"

ENABLE STORAGE IN ROW CHUNK 8192

CACHE NOLOGGING COMPRESS MEDIUM KEEP_DUPLICATES

STORAGE(INITIAL 131072 NEXT 131072 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT

)

)

)

Query by Partition Name

The normal way to reference a specific partition is to use the partition (partition_name) in the query. For example, we have found 13187 rows in our newly created partition (i.e., SYS_P23979).

$ sqlplus / as sysdba;

SQL>spool /tmp/partition.txt

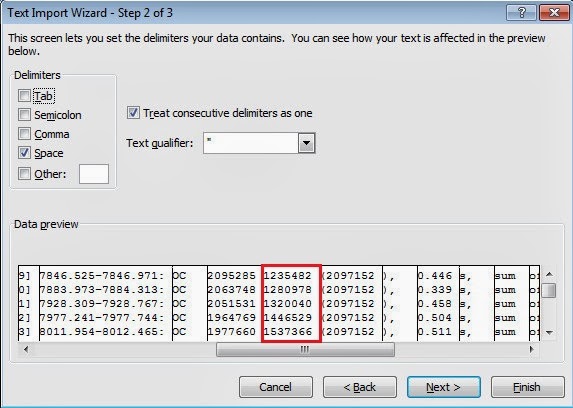

SQL> select * from FUSION.PS_TXN partition (SYS_P23979);

ID PARENTID COLLID

---------- ---------- ----------

CONTENT

--------------------------------------------------------------------------------

CREATION_DATE

------------------

1 -1 20150129

ACED0005737200246F7261636C652E6A626F2E7365727665722E444253657269616C697A65722441

4D526F77E6FDC51C118C84070300034A000A6D5065727369737449644C0006746869732430740020

27-JAN-14

<snipped>

13187 rows selected.

Partitions Created Automatically

Inserting a row that has a date later than December 23, 2012 (i.e., our initial partition's high value) would raise an error with normal range partitioning. However, with interval partitioning, Oracle 11g determines the high value of the defined range partitions, called the transition point, and creates new partitions for data that is beyond that high value.

If you have a benchmark that will be run repeatedly for a long time, controlling the growth of your database is important. To find out how many partitions were added in PS_TXN table, you can do the following:

SQL> select table_name, partition_name, partition_position, high_value from DBA_TAB_PARTITIONS where TABLE_NAME='PS_TXN';

------------------------------ ------------------------------ ------------------

HIGH_VALUE

--------------------------------------------------------------------------------

-

PS_TXN P_0 1

TO_DATE(' 2012-12-23 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', 'NLS_CALENDAR=GREGORIA

PS_TXN SYS_P16387 2

TO_DATE(' 2012-12-24 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', 'NLS_CALENDAR=GREGORIA

<snipped>

PS_TXN SYS_P23972 214

TO_DATE(' 2014-01-25 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', 'NLS_CALENDAR=GREGORIA

PS_TXN SYS_P23979 215

TO_DATE(' 2014-01-28 00:00:00', 'SYYYY-MM-DD HH24:MI:SS', 'NLS_CALENDAR=GREGORIA

215 rows selected.

Conclusions

Oracle Fusion Applications use the PS_TXN table to store the intermediate processing state. When there are many concurrent users, this table receives a high number of inserts and could suffer from contention issues.

- To detect this contention issue

- Check the wait event "enq: HW contention" in the AWR report of the database.

- To alleviate this contention issue

- Follow the steps outlined in note ID 1444959.1 in My Oracle Support

References

- How to Tune the PS_TXN Table in the FUSION Schema

- More Partitioning Choices

- Oracle Interval Partitioning Tips

- Location of alert log in 11g

- How to get Oracle create table statement in SQL*Plus

- Writing sqlplus output to a file

- Ask Tom "Interval partitioning" - Oracle

- Interval partitioning - Oracle FAQ

- How to check the Oracle database version