- Large Live Data Set

- Frequency of Objects Promoted from Young Gen (or Nursery) to Old Gen

- Frequency of Collections

Importance of Estimating Live Data Size

The mark-and-sweep algorithm is the basis of all commercial garbage collectors in JVMs (including JRockit) today.[2,3] Here is how it works:[2]

When the system starts running out of memory (or some other such trigger) the GC is fired. It first enumerates all the roots and then starts visiting the objects referenced by them recursively (essentially travelling the nodes in the memory graph). When it reaches an object it marks it with a special flag indicating that the object is reachable and hence not garbage. At the end of this mark phase it gets into the sweep phase. Any object in memory that is not marked by this time is garbage and the system disposes it.As can be inferred from the above algorithm, the computational complexity of mark and sweep is both a function of the amount of live data on the heap (for mark) and the actual heap size (for sweep). If your application has a large live data set, it could be a garbage collection bottleneck. Any garbage collection algorithm could break down given too large an amount of live data. So, it's important to estimate the size of live data set in your Java applications for any performance evaluation.

How to Estimate the Live Data Size

To estimate the live data size, we have used:

- -Xverbose:gc flag

<start>-<end>: <type> <before>KB-><after>KB (<heap>KB), <time> ms, sum of pauses <pause> ms.

<start> - start time of collection (seconds since jvm start).

<type> - OC (old collection) or YC (young collection).

<end> - end time of collection (seconds since jvm start).

<before> - memory used by objects before collection (KB).

<after> - memory used by objects after collection (KB).

<heap> - size of heap after collection (KB).

<time> - total time of collection (milliseconds).

<pause> - total sum of pauses during collection (milliseconds).

>grep "\[OC#" jrockit_gc.log >OC.txtFor example, you can find the following sample lines in OC.txt:

[INFO ][memory ][Sat Jan 4 09:02:11 2014][1388826131638][21454] [OC#159] 7846.525-7846.971:

OC 2095285KB->1235482KB (2097152KB), 0.446 s, sum of pauses 389.247 ms, longest pause 389.247 ms.

...

OC 2095285KB->1235482KB (2097152KB), 0.446 s, sum of pauses 389.247 ms, longest pause 389.247 ms.

...

[INFO ][memory ][Sat Jan 4 10:10:55 2014][1388830255584][21454] [OC#251] 11970.727-11971.142:

OC 1849047KB->1509435KB (2097152KB), 0.415 s, sum of pauses 379.199 ms, longest pause 379.199 ms.

OC 1849047KB->1509435KB (2097152KB), 0.415 s, sum of pauses 379.199 ms, longest pause 379.199 ms.

For better estimation, you want to consider using statistics only from the steady state. For example, our benchmark was run with following phases:

- Ramp-up: 7800 secs

- Steady: 4200 secs

In the above sample output, only the first and the last OC event were displayed (see the timestamp in red). Finally, live data size is estimated to be the average memory size after OC (shown in blue).

Using Excel to Compute Average

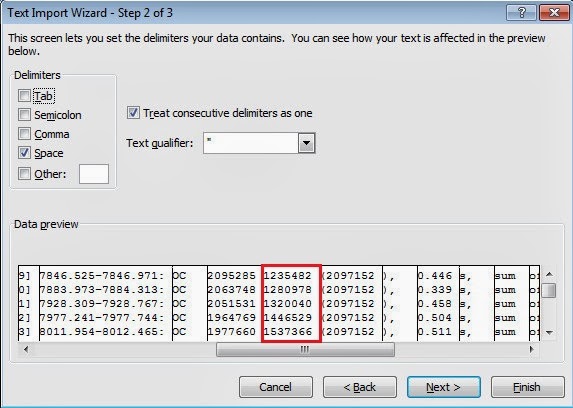

You can use Java code to parse the GC log and compute average memory size after Old Collections. An alternative way is using Excel, which is demonstrated here.

Before we start, we need to clean up data by replacing the following tokens:

"KB"

"->"

with spaces.

Then you open OC.txt and specify space as the delimiter for field extraction.

Select the "memory size after collection" field as shown above and compute the average as shown below:

Finally, the estimated live data size is 1359 MB.

Conclusions

If you have found that your live data set is large, you can improve your application's performance by:

- Reducing it by improving your codes

- For example, you should avoid object pooling which can lead both to more live data and to longer object life spans.

- Giving your application a larger heap

- As the complexity of a well written GC is mostly a function of the size of the live data set, and not the heap size, it is not too costly to support larger heaps for the same amount of live data. This has also the added benefit of it being harder to run into fragmentation issues and of course, implicitly, the possibility to store more live data.[3]

Finally, what size of live data set is considered large. It actually depends on what GC collector you choose. For example, if you choose JRockit Real Time as your garbage collector, practically all standard application, with live data sets up to about 30 to 50 percent of the heap size, can be successfully handled by it with pause times shorter than, or equal to, the supported service level.[3] However, if the live data size is larger than 50 percent, it could be considered too large.

References

- JRockit: Out-of-the-Box Behavior of Four Generational GC's (Xml and More)

- Back To Basics: Mark and Sweep Garbage Collection

- Oracle JRockit- The Definitive Guide

- JRockit: Parallel vs Concurrent Collectors (Xml and More)

- JRockit: All Posts on "Xml and More" (Xml and More)

- JRockit R27.8.1 and R28.3.1 versioning

- Note that R28 went from R28.2.9 to R28.3.1—these are just ordinary maintenance releases, not feature releases. There is zero significance to the jump in minor version number.

No comments:

Post a Comment