|

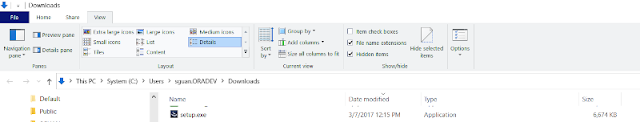

Figure 1. Provisioning an OAC instance |

Learning Objectives

- Describe the editions of Oracle Analytics Cloud

- Describe the solutions applicable for each OAC edition

- Identify the prerequisites for OAC

- Explain the concept of a compute shape

Video 1. Create a Service with Oracle Analytics Cloud (YouTube link)

Oracle Analytics Cloud Products

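Oracle Analytics Cloud is offered as three product options [4]: Oracle Analytics Cloud, Oracle Analytics Cloud Subscription, and Oracle Analytics Cloud - Classic.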

Differences Between Products

Oracle Analytics Cloud, Oracle Analytics Cloud Subscription, and Oracle Analytics Cloud - Classic differ mainly in how you deploy and manage your services on Oracle Cloud. The differences fall into three areas:

- Editions

- Service Management

- Infrastructure

Editions

Two editions are available: Professional and Enterprise. The features available in each edition depend on your product option and on the regions you can access. See [4] for details, especially on which products are available in which regions and from which dates.

Service Management

| Service Management | Oracle Analytics Cloud | Oracle Analytics Cloud Subscription | Oracle Analytics Cloud - Classic |
|---|---|---|---|
| Managed by You (Oracle User) |  |  | You manage the service lifecycle and configuration, and have SSH access to the compute node VM. |
| Oracle-Managed | Oracle provides lifecycle management and configuration. You can log service requests with Oracle Cloud Support to request service updates. |  |  |
| Customer Responsibility |  |  |  |
| Manage users and roles |  |  |  |
| Create and size service |  |  |  |
| Create database cloud service |  |  |  |
| Administer database cloud service |  |  |  |
| Back up and restore services | Oracle schedules and manages backups | Oracle schedules and manages backups |  |
| Patch services | Oracle schedules and applies patches | Oracle schedules and applies patches |  |
| Patch operating system | Oracle schedules and applies patches | Oracle schedules and applies patches |  |
| Start and stop services |  |  |  |
| Pause and resume services |  |  |  |
| Monitor services | Oracle has direct access to diagnostic logs for troubleshooting issues | Oracle has direct access to diagnostic logs for troubleshooting issues |  |

Infrastructure

| Infrastructure | Oracle Analytics Cloud | Oracle Analytics Cloud Subscription | Oracle Analytics Cloud - Classic |
|---|---|---|---|
| Oracle Cloud Infrastructure (Gen 2) |  |  |  |
| Oracle Cloud Infrastructure (Gen 1) |  |  |  |
| Oracle Cloud Infrastructure Classic |  |  |  |
| Oracle Cloud Infrastructure Identity and Access Management (IAM) identity domains | Available on Oracle Cloud Infrastructure (Gen 2) to new customers in some Oracle Cloud regions. |  |  |
| Oracle Identity Cloud Service |  |  |  |
| Load Balancer | An Oracle-managed load balancer is automatically created and configured for your service. | An Oracle-managed load balancer is automatically created and configured for your service. | When you enable Oracle Identity Cloud Service as the identity provider, an Oracle-managed load balancer is automatically created and configured for your service. |
| Cloud Storage Required | Uses Oracle Cloud Infrastructure Object Storage: a storage bucket is automatically created for your service. | Uses Oracle Cloud Infrastructure Object Storage: a storage bucket is automatically created for your service. | Uses Oracle Cloud Infrastructure Object Storage Classic: you can create the object storage container either before or while you set up your service. |
| Oracle Database Cloud Service Required |  |  | You must set up a database service for the Oracle Analytics Cloud - Classic schemas and organize a backup schedule. |
| Size Deployment by Shape | Various Oracle Compute Unit (OCPU) sizing options. | Various Oracle Compute Unit (OCPU) sizing options. | Standard and high-memory shapes. The list of available shapes may vary by region. |
| Size Deployment by Number of Users | Only on Oracle Cloud Infrastructure (Gen 2). |  |  |
| Scale Up and Scale Down |  |  |  |
| Availability Domains | Each region has multiple isolated availability domains, with separate power and cooling. The availability domains within a region are interconnected by a low-latency network. When you create a service, you select the region where you want to deploy it, and Oracle automatically selects an availability domain. | Each region has multiple isolated availability domains, with separate power and cooling. The availability domains within a region are interconnected by a low-latency network. When you create a service, you select the region where you want to deploy it, and Oracle automatically selects an availability domain. |  |
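The sizing rows above (size by shape or by number of users, with user-based sizing only on Gen 2) can be made concrete with a small sketch that assembles a create-instance request body. This is an illustration under stated assumptions, not Oracle's client code: the helper `build_create_payload`, the `Sizing` class, and the field and enum names (`featureSet`, `capacityType`, `OLPU_COUNT`, `USER_COUNT`, `SELF_SERVICE_ANALYTICS`, `ENTERPRISE_ANALYTICS`, `LICENSE_INCLUDED`) are assumed to mirror the OCI Analytics REST API and should be checked against the current API reference. The compartment OCID is a placeholder.

```python
import json
from dataclasses import dataclass
from typing import Literal

# ASSUMPTION: the enum values below are modeled on the OCI Analytics API and
# must be verified against the official API reference before use.
Edition = Literal["Professional", "Enterprise"]
FEATURE_SET = {
    "Professional": "SELF_SERVICE_ANALYTICS",
    "Enterprise": "ENTERPRISE_ANALYTICS",
}


@dataclass
class Sizing:
    by_users: bool  # True: size by number of users; False: size by CPU capacity
    value: int      # number of users, or number of CPU units


def build_create_payload(name: str, compartment_id: str, edition: Edition,
                         sizing: Sizing, infrastructure: str) -> dict:
    """Hypothetical helper: build a request body for a new OAC instance."""
    if sizing.value <= 0:
        raise ValueError("capacity value must be positive")
    # Per the Infrastructure table, sizing by number of users is only
    # offered on Oracle Cloud Infrastructure (Gen 2).
    if sizing.by_users and infrastructure != "Gen 2":
        raise ValueError("sizing by number of users requires OCI Gen 2")
    return {
        "name": name,
        "compartmentId": compartment_id,
        "featureSet": FEATURE_SET[edition],
        "capacity": {
            # ASSUMPTION: CPU-based sizing is expressed as OLPU_COUNT in the API.
            "capacityType": "USER_COUNT" if sizing.by_users else "OLPU_COUNT",
            "capacityValue": sizing.value,
        },
        "licenseType": "LICENSE_INCLUDED",  # assumed license option
    }


if __name__ == "__main__":
    payload = build_create_payload(
        "salesanalytics",
        "ocid1.compartment.oc1..example",  # placeholder OCID
        "Enterprise",
        Sizing(by_users=False, value=2),
        "Gen 2",
    )
    print(json.dumps(payload, indent=2))
```

Rejecting user-based sizing outside Gen 2 in the helper itself keeps the constraint from the table next to the code that depends on it, rather than relying on the service to return an error.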

Oracle Analytics Cloud - Professional Edition

With Professional Edition, you can:

- Take control of your data

- Create processes for business analytics applications and data collection

- Discover insights on the data that you provide

- Prepare data through interactive data flows

- Explore data through grammar-based visualization

- Coordinate business analytics within your department or organization

- Use the Oracle Analytics Day by Day mobile application

Oracle Analytics Cloud - Enterprise Edition

Enterprise Edition includes all the features of Professional Edition. In addition, you can:

- Build data models, reports, and analytic dashboards in an enterprise business intelligence environment

- Design and publish pixel-perfect reports from your enterprise data

- Migrate content from your existing on-premises environment

- Perform a sensitivity analysis to test various data scenarios

- Use the Oracle Analytics Day by Day mobile application

- Maintain live and optimized connectivity to on-premises data warehouses
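Because Enterprise Edition is a superset of Professional Edition, choosing an edition reduces to a subset check: if every capability you need is in Professional, Professional is sufficient; otherwise you need Enterprise. The sketch below illustrates that reasoning. The capability labels are hypothetical shorthand paraphrased from the two lists above, not official Oracle feature names, and `minimum_edition` is an illustrative helper.

```python
# Hypothetical capability labels, paraphrased from the Professional list above.
PROFESSIONAL = frozenset({
    "data preparation",      # interactive data flows
    "data visualization",    # grammar-based visualization
    "day by day mobile",     # Oracle Analytics Day by Day app
})

# Enterprise includes everything in Professional plus the extra items above.
ENTERPRISE = PROFESSIONAL | frozenset({
    "enterprise data models",
    "pixel-perfect reports",
    "on-premises content migration",
    "sensitivity analysis",
    "on-premises data warehouse connectivity",
})


def minimum_edition(required: frozenset) -> str:
    """Return the smallest edition whose capabilities cover `required`."""
    unknown = required - ENTERPRISE
    if unknown:
        raise ValueError(f"unknown capabilities: {sorted(unknown)}")
    return "Professional" if required <= PROFESSIONAL else "Enterprise"


if __name__ == "__main__":
    print(minimum_edition(frozenset({"data visualization"})))  # Professional
    print(minimum_edition(frozenset({"data visualization",
                                     "pixel-perfect reports"})))  # Enterprise
```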